Recent & Upcoming Talks

2025

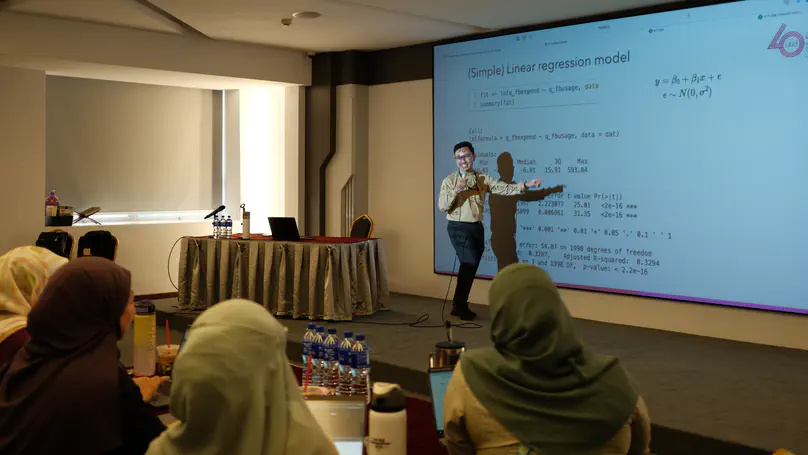

Welcome to our introductory session on R software and its powerful ecosystem. Learn how R can be used to produce compelling data products, such as reports with rich tables and visualizations. We’ll take a hands-on approach and even explore how to recreate parts of the AITI ICT Survey Report 2022 using R and Quarto.

2024

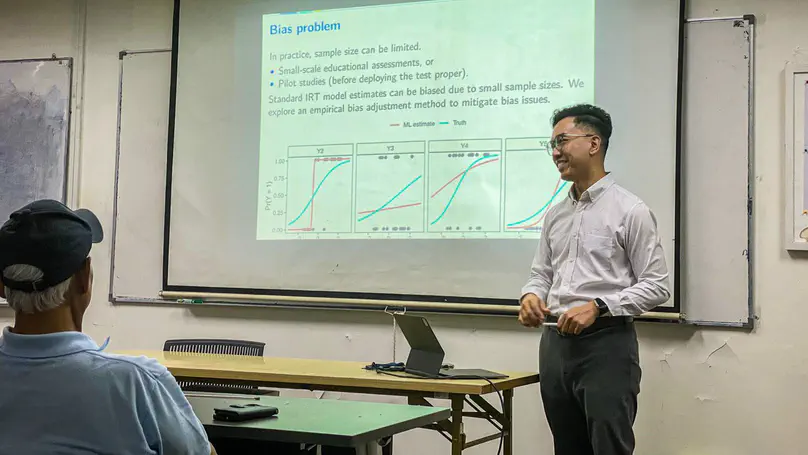

In this talk, we will explore Item Response Theory (IRT), a framework for understanding how individual test items (questions) behave in educational assessments. IRT helps educators and researchers measure students’ abilities more accurately by looking at how they respond to various test items. We will introduce the basics of IRT, explain how it applies to educational testing, and show how it provides valuable insights into both the test questions and the students taking the test. The talk will demonstrate how to fit IRT models in R, making it easier for attendees to apply these techniques in their own work. We will also discuss bias in IRT parameter estimates, which can be substantial when sample sizes are small. To address this, the presentation introduces an empirical bias adjustment method. The approach is simple to implement, offers a straightforward alternative to more complex bias-reduction techniques, and is useful to researchers and practitioners alike. Simulation studies show that it effectively reduces bias, leading to more precise and reliable measurements in psychometric assessments.
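As a minimal illustration of what an IRT model describes, the sketch below codes the item characteristic curve of the two-parameter logistic (2PL) model, one common IRT model; the abstract does not say which models the talk covers, and the sketch is in Python rather than R. The item parameters (discrimination `a`, difficulty `b`) are hypothetical values chosen for illustration.

```python
import math

def p_correct_2pl(theta: float, a: float, b: float) -> float:
    """Probability that a student with ability theta answers an item correctly
    under the 2PL model: P = 1 / (1 + exp(-a * (theta - b)))."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

# Hypothetical items: (discrimination a, difficulty b)
items = {"easy": (1.2, -1.0), "medium": (1.0, 0.0), "hard": (1.5, 1.5)}

for name, (a, b) in items.items():
    probs = [round(p_correct_2pl(t, a, b), 3) for t in (-2.0, 0.0, 2.0)]
    print(name, probs)
```

A student whose ability equals an item's difficulty (`theta == b`) has a 0.5 chance of answering correctly; a larger `a` makes the curve steeper around that point, so the item separates students of nearby ability more sharply.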

In the field of psychometrics, the accuracy and reliability of measurement tools are paramount, particularly when employing Item Response Theory (IRT) models to assess latent psychological traits. A persistent challenge in this domain is the non-zero bias of order $O(1/n)$ that estimators exhibit in finite samples, a problem aggravated by departures from the latent normality assumption, such as excess zeroes or skewed distributions. This presentation introduces an empirical bias adjustment method designed to mitigate this problem. The method applies adjustments derived from an empirical approximation of the bias through higher-order derivatives of the estimating functions. This simple approach offers a promising avenue for making IRT model estimation more robust, especially in samples that deviate from idealised assumptions. Its theoretical advantages include markedly improved recovery of the true parameter values, making it valuable to both researchers and practitioners. The innovation lies in its straightforward adjustment process, which can be implemented via implicit methods (i.e., solving adjusted estimating equations) or explicit methods (i.e., adjusting the original estimators), easing adoption and offering an appealing alternative to existing, more complex bias-reduction techniques. Simulation studies validating the theoretical framework confirm that the empirical bias adjustment reduces parameter bias, enabling more precise and dependable psychometric measurements.
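The explicit route (adjusting the original estimator) can be illustrated on a toy problem where the first-order bias is known in closed form. This sketch is not an IRT model and not the talk's empirical adjustment: it assumes i.i.d. exponential data, whose rate MLE $\hat\lambda = 1/\bar x$ has bias $\lambda/(n-1)$, which is $\lambda/n$ to first order. Subtracting the plug-in estimate $\hat\lambda/n$ gives $\tilde\lambda = \hat\lambda(1 - 1/n)$, which in this special case is exactly unbiased.

```python
import random

def rate_mle(xs):
    # MLE of the exponential rate: 1 / sample mean
    return len(xs) / sum(xs)

def rate_bias_corrected(xs):
    # Explicit adjustment: subtract the plug-in first-order bias estimate lam_hat / n
    n = len(xs)
    lam_hat = rate_mle(xs)
    return lam_hat - lam_hat / n

def simulate(true_rate=2.0, n=5, reps=20000, seed=1):
    # Average both estimators over many small simulated samples
    rng = random.Random(seed)
    raw_total = adj_total = 0.0
    for _ in range(reps):
        xs = [rng.expovariate(true_rate) for _ in range(n)]
        raw_total += rate_mle(xs)
        adj_total += rate_bias_corrected(xs)
    return raw_total / reps, adj_total / reps

raw_mean, adj_mean = simulate()
print(f"mean MLE: {raw_mean:.3f}, mean adjusted: {adj_mean:.3f} (true rate 2.0)")
```

With `n = 5`, the unadjusted estimator should average roughly $n\lambda/(n-1) = 2.5$, while the adjusted one should sit near the true rate of 2.0. In IRT models the bias has no such closed form, which is where an empirical approximation becomes useful.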

2022

2021

2020

Soldiers are expected to perform complex and demanding tasks during operations, often while carrying a heavy load. It is therefore important for commanders to understand the relationship between load carriage and soldiers’ performance, as such knowledge helps inform decision-making on training policies, operational doctrines, and future soldier systems requirements. To investigate this, repeated experiments were conducted to capture key soldier performance parameters under controlled conditions. The collected data contained missing values, due both to dropouts and to measurements that were not taken. We propose a Bayesian structural equation model to quantify a latent variable representing soldiers’ abilities, while taking into account the non-random nature of the dropouts and time-varying effects. This talk describes the modelling exercise, emphasising the statistical model-building process as well as the practical reporting of the model’s outputs.

Soldiers are required to perform tasks that call upon a complex combination of their physical and cognitive capabilities. For example, soldiers are expected to communicate effectively with each other, operate specialised equipment, and maintain overall situational awareness, often while carrying a heavy load. From a planning and doctrine perspective, it is important for commanders to understand the relationship between load carriage and soldiers’ performance. Such information could inform recommendations on future policies for training, operational safety, and future soldier systems requirements. To this end, the Royal Brunei Armed Forces (RBAF) conducted controlled experiments and collected numerous measurements intended to capture key soldier performance parameters. The structure of the data set posed several interesting challenges, namely: 1) how do we define “performance”? 2) how do we appropriately account for the longitudinal nature of the data (repeated measurements)? and 3) how do we handle non-ignorable dropouts? We propose a structural equation model to quantify a latent variable representing soldiers’ abilities, while taking into account the non-random nature of the dropouts and time-varying effects. The main aim of the study is to quantify the relationship between load carried and performance. Additionally, modelling the dropouts also allows us to determine the “expected time to exhaustion” for a given load carried by a soldier.
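The abstract does not give the form of the dropout model, so the following is only a hypothetical illustration of how a fitted dropout model can be turned into an expected time to exhaustion. It assumes a discrete-time hazard that is logit-linear in load and time, with made-up coefficients `a`, `b` and `c`, and uses the identity $E[T] = \sum_{t \ge 0} P(T > t)$, truncated at a finite horizon.

```python
import math

def dropout_hazard(load_kg, t, a, b, c):
    # Hypothetical logit-linear hazard: P(drop out in interval t | still going at t - 1)
    eta = a + b * load_kg + c * t
    return 1.0 / (1.0 + math.exp(-eta))

def expected_time_to_exhaustion(load_kg, a=-4.0, b=0.05, c=0.1, horizon=200):
    """E[min(T, horizon)] = sum_{t=0}^{horizon-1} P(T > t), where T is the
    interval in which the soldier drops out. Coefficients are made up."""
    survival = 1.0   # P(T > 0)
    expected = 0.0
    for t in range(1, horizon + 1):
        expected += survival                                   # adds P(T > t - 1)
        survival *= 1.0 - dropout_hazard(load_kg, t, a, b, c)  # now P(T > t)
    return expected

for load in (20.0, 30.0, 40.0):
    print(load, round(expected_time_to_exhaustion(load), 2))
```

With these invented coefficients the expected time falls as the load increases; the numbers carry no empirical meaning, and a model that also accounts for latent ability would condition the hazard on it as well.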

2019

In statistical modelling, there is often genuine interest in learning the most reasonable, parsimonious, and interpretable model that fits the data. This is especially true when faced with the oddly perplexing phenomenon of having “too much information” (data saturation). Model selection is a widely studied topic. In this talk, I will focus on the Bayesian approach to model selection, with an emphasis on selecting variables in a linear regression model. The aims of the talk are threefold: 1) to introduce the statistical framework for Bayesian variable selection; 2) to show how model probabilities can serve as a basis for model selection; and 3) to demonstrate its application to real-world data (mortality and air pollution data). The hope is that the audience will gain an understanding of the method, possibly spurring further research and applications in their respective work.
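To make the idea of model probabilities concrete, here is a minimal sketch that enumerates every subset of predictors and converts Bayes factors into posterior model probabilities. It assumes Zellner's g-prior with $g = n$ and a uniform prior over models, for which the Bayes factor of a model $M_\gamma$ with $p_\gamma$ predictors against the intercept-only model $M_0$ has the closed form $\mathrm{BF}(M_\gamma : M_0) = (1+g)^{(n-p_\gamma-1)/2}\,[1 + g(1-R^2_\gamma)]^{-(n-1)/2}$. The talk may use different priors, and the data here are simulated rather than the mortality and air pollution data.

```python
import itertools
import numpy as np

def r_squared(X, y):
    # R^2 of an OLS fit with an intercept; X may have zero columns
    n = len(y)
    design = np.column_stack([np.ones(n), X]) if X.shape[1] else np.ones((n, 1))
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return 1.0 - np.sum(resid ** 2) / np.sum((y - y.mean()) ** 2)

def model_probabilities(X, y, g=None):
    n, k = X.shape
    g = float(n) if g is None else g  # unit-information choice g = n
    models, log_bf = [], []
    for p in range(k + 1):
        for subset in itertools.combinations(range(k), p):
            r2 = r_squared(X[:, list(subset)], y)
            log_bf.append(0.5 * (n - p - 1) * np.log1p(g)
                          - 0.5 * (n - 1) * np.log1p(g * (1.0 - r2)))
            models.append(subset)
    log_bf = np.array(log_bf)
    # Uniform model prior: posterior probabilities are normalised Bayes factors
    w = np.exp(log_bf - log_bf.max())
    return models, w / w.sum()

rng = np.random.default_rng(0)
n = 100
X = rng.normal(size=(n, 4))
y = 1.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(size=n)  # only x0 and x1 matter

models, probs = model_probabilities(X, y)
for i in np.argsort(probs)[::-1][:3]:
    print(models[i], round(float(probs[i]), 3))
```

Full enumeration is only feasible for a handful of predictors ($2^k$ models); with many candidates, stochastic search over the model space is the usual alternative.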

2018

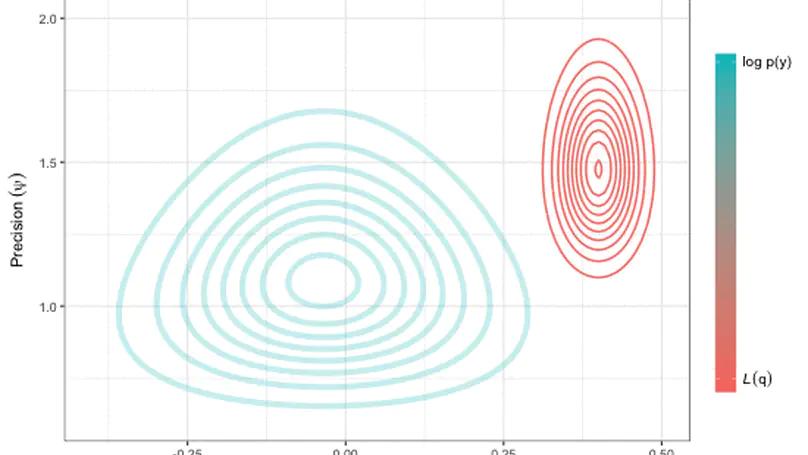

The fitting of complex statistical models that consist of latent or nuisance variables, in addition to various parameters to be estimated, likely involves overcoming an intractable integral. For instance, calculating the likelihood of such models requires marginalising over the latent variables, which may prove computationally difficult, due either to dimensionality or to model design. Variational inference, also known as variational Bayes, offers an efficient alternative to Markov chain Monte Carlo methods, the Laplace approximation, and quadrature methods. Rooted in Bayesian inference and popularised in machine learning, its main idea is to sidestep these difficulties by working with “easy” density functions in lieu of the true posterior distribution. The approximating density is chosen to minimise the (reverse) Kullback-Leibler divergence between it and the true posterior. The topics discussed will be mean-field distributions, the coordinate ascent algorithm, and approximation properties, followed by an example. The hope is that the audience will gain a basic understanding of the method, possibly spurring further research and applications in their respective work.
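As an example of coordinate ascent with a mean-field family, the sketch below uses the textbook case of a normal sample with unknown mean $\mu$ and precision $\tau$, a conjugate normal-gamma prior, and the factorisation $q(\mu, \tau) = q(\mu)\,q(\tau)$. The updates follow the standard derivation for this model; the hyperparameter defaults are arbitrary weak choices, and the data are simulated.

```python
import numpy as np

def cavi_normal_gamma(x, mu0=0.0, lam0=1e-3, a0=1e-3, b0=1e-3, tol=1e-10, max_iter=500):
    """Mean-field CAVI for x_i ~ N(mu, 1/tau), mu | tau ~ N(mu0, 1/(lam0 * tau)),
    tau ~ Gamma(a0, rate=b0), with q(mu, tau) = q(mu) q(tau)."""
    x = np.asarray(x, dtype=float)
    n, xbar = len(x), x.mean()
    # In this model the mean of q(mu) and the shape of q(tau) stay fixed across iterations
    mu_n = (lam0 * mu0 + n * xbar) / (lam0 + n)
    a_n = a0 + 0.5 * (n + 1)
    e_tau = 1.0  # initial guess for E_q[tau]
    for _ in range(max_iter):
        lam_n = (lam0 + n) * e_tau  # update q(mu) = N(mu_n, 1/lam_n)
        # Update q(tau) = Gamma(a_n, b_n) using expectations under the current q(mu)
        b_n = b0 + 0.5 * (np.sum((x - mu_n) ** 2) + n / lam_n
                          + lam0 * ((mu_n - mu0) ** 2 + 1.0 / lam_n))
        new_e_tau = a_n / b_n
        converged = abs(new_e_tau - e_tau) < tol
        e_tau = new_e_tau
        if converged:
            break
    lam_n = (lam0 + n) * e_tau  # make q(mu) consistent with the final q(tau)
    return mu_n, lam_n, a_n, b_n

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=1000)
mu_n, lam_n, a_n, b_n = cavi_normal_gamma(x)
print(f"E[mu] = {mu_n:.3f}, E[tau] = {a_n / b_n:.4f}")
```

With vague hyperparameters, $E_q[\tau]$ should end up close to the reciprocal of the sample variance and $E_q[\mu]$ close to the sample mean; each update cannot decrease the evidence lower bound, which is why the alternation converges.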

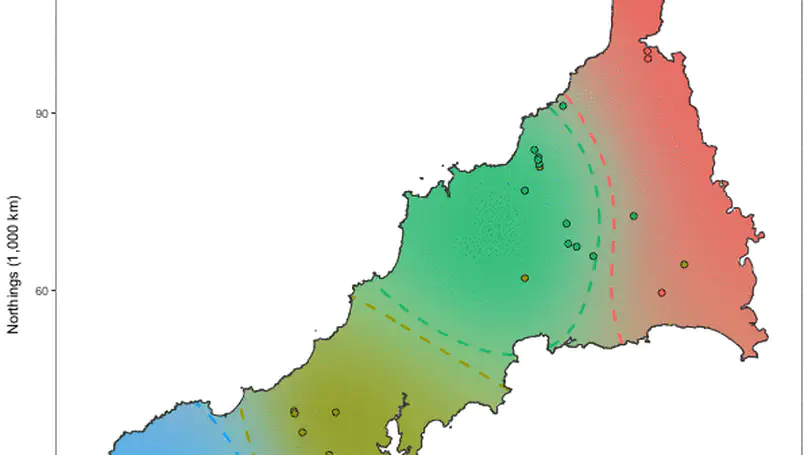

In a regression setting, we define an I-prior as a Gaussian process prior on the regression function with covariance kernel equal to its Fisher information. We present methodology and computational work on estimating regression functions by working in the appropriate reproducing kernel Hilbert space of functions and assuming an I-prior on the function of interest. In a regression model with normally distributed errors, estimation is simple: maximum likelihood and the EM algorithm are employed. For classification models (categorical response models), estimation is performed using variational inference. I-prior models perform comparably to, and often better than, similar leading state-of-the-art models for prediction and inference. Applications are plentiful, including smoothing models, modelling multilevel data, longitudinal data, functional covariates, multi-class classification, and even spatiotemporal modelling.
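A minimal numerical sketch of I-prior regression with normal errors follows. It assumes a single covariate, a centred linear kernel $h(x, x') = (x - \bar x)(x' - \bar x)$, i.i.d. errors with precision $\psi$, and the representation $f = \lambda H w$ with $w \sim N(0, \psi I_n)$, so that marginally $y \sim N(\bar y \mathbf{1}, \lambda^2 \psi H^2 + \psi^{-1} I_n)$, with the intercept fixed at $\bar y$ for simplicity. The scale $\lambda$ and precision $\psi$ are estimated by a crude grid search on the marginal log-likelihood, a stand-in for the EM algorithm the talk mentions; the function name and the data are hypothetical.

```python
import numpy as np

def fit_iprior_linear(x, y, log_lam_grid, log_psi_grid):
    """Hypothetical sketch: I-prior regression with a centred linear kernel,
    fitted by grid search on the marginal log-likelihood (not EM)."""
    n = len(y)
    xc = x - x.mean()
    H = np.outer(xc, xc)                  # kernel matrix h(x_i, x_j) = xc_i * xc_j
    d, U = np.linalg.eigh(H)              # H = U diag(d) U^T
    z = U.T @ (y - y.mean())              # centred responses in the eigenbasis
    lam = np.exp(log_lam_grid)[:, None, None]
    psi = np.exp(log_psi_grid)[None, :, None]
    v = lam**2 * psi * d**2 + 1.0 / psi   # eigenvalues of Var(y) = lam^2 psi H^2 + I / psi
    loglik = -0.5 * (n * np.log(2 * np.pi) + np.sum(np.log(v) + z**2 / v, axis=-1))
    i, j = np.unravel_index(np.argmax(loglik), loglik.shape)
    lam_hat, psi_hat = np.exp(log_lam_grid[i]), np.exp(log_psi_grid[j])
    # Posterior mean of f: Cov(f, y) Var(y)^{-1} (y - ybar), computed in the eigenbasis
    v_hat = lam_hat**2 * psi_hat * d**2 + 1.0 / psi_hat
    f_hat = U @ (lam_hat**2 * psi_hat * d**2 / v_hat * z)
    return lam_hat, psi_hat, y.mean() + f_hat

rng = np.random.default_rng(1)
x = rng.uniform(0.0, 10.0, size=50)
y = 1.0 + 2.0 * x + rng.normal(size=50)
lam_hat, psi_hat, fitted = fit_iprior_linear(
    x, y, np.linspace(-8.0, 2.0, 201), np.linspace(-4.0, 4.0, 161))
print(f"lambda = {lam_hat:.4f}, psi = {psi_hat:.3f}")
```

With a linear kernel the fitted function is a straight line, close to the least-squares fit here because the simulated signal is strong; other kernels (for example, for smoothing) would swap in a different `H` while leaving the rest of the computation unchanged.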

Estimation of complex models that consist of latent variables and various parameters, in addition to the observed data, might involve overcoming an intractable integral. For instance, calculating the likelihood of such models requires marginalising over the latent variables, which may prove computationally difficult, due either to model design or to dimensionality. Variational inference, also known as variational Bayes, offers an efficient alternative to Markov chain Monte Carlo methods, the Laplace approximation, and quadrature methods. Rooted in Bayesian inference and popularised in machine learning, its main idea is to sidestep these difficulties by working with “easy” density functions in lieu of the true posterior distribution. The approximating density is chosen to minimise the (reverse) Kullback-Leibler divergence between it and the true posterior. The topics discussed will be mean-field distributions, the coordinate ascent algorithm, and its properties, followed by examples. The hope is that the audience will gain a basic understanding of the method, possibly spurring further research and applications in their respective work.
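One property of minimising the reverse divergence $\mathrm{KL}(q \,\|\, p)$ is that it tends to be mode-seeking. The toy sketch below, not taken from the talk, fits a single Gaussian $q$ to an assumed bimodal target (an equal mixture of $N(-3, 1)$ and $N(3, 1)$) by grid search, once under the reverse divergence and once under the forward divergence $\mathrm{KL}(p \,\|\, q)$ for contrast.

```python
import numpy as np

def log_normal(x, m, s):
    # Log density of N(m, s^2)
    return -0.5 * np.log(2.0 * np.pi * s**2) - 0.5 * ((x - m) / s) ** 2

x = np.linspace(-25.0, 25.0, 4001)
dx = x[1] - x[0]
# Assumed target p: equal mixture of N(-3, 1) and N(3, 1), evaluated stably in log space
log_p = np.log(0.5) + np.logaddexp(log_normal(x, -3.0, 1.0), log_normal(x, 3.0, 1.0))
p = np.exp(log_p)

means = np.linspace(-5.0, 5.0, 201)
sds = np.linspace(0.3, 4.0, 75)

def fit_gaussian(direction):
    """Grid-search the Gaussian q = N(m, s^2) minimising KL(q||p) ("reverse")
    or KL(p||q) ("forward"); integrals are Riemann sums on the grid x."""
    best = (np.inf, None, None)
    for s in sds:
        log_q = log_normal(x[None, :], means[:, None], s)  # one row per candidate mean
        if direction == "reverse":
            kl = np.sum(np.exp(log_q) * (log_q - log_p), axis=1) * dx
        else:
            kl = np.sum(p * (log_p - log_q), axis=1) * dx
        i = int(np.argmin(kl))
        if kl[i] < best[0]:
            best = (float(kl[i]), float(means[i]), float(s))
    return best

kl_rev, m_rev, s_rev = fit_gaussian("reverse")
kl_fwd, m_fwd, s_fwd = fit_gaussian("forward")
print(f"reverse KL: m = {m_rev:.2f}, s = {s_rev:.2f}")
print(f"forward KL: m = {m_fwd:.2f}, s = {s_fwd:.2f}")
```

The reverse fit should lock onto one component (mean near $\pm 3$, standard deviation near 1), whereas the forward fit matches moments (mean near 0, standard deviation near $\sqrt{10}$) and places mass in the low-density region between the modes. This is one reason variational approximations can underestimate posterior spread.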

2017

An extension of the I-prior methodology to binary response data is explored. Starting from a latent variable approach, it is assumed that there exist continuous, auxiliary random variables that decide the outcome of the binary responses. Fitting a classical linear regression model on these latent variables while assuming normality of the error terms leads to the well-known generalised linear model with a probit link. Instead, a more general regression approach is considered, in which an I-prior is assumed on the regression function, which lies in some reproducing kernel Hilbert space. An I-prior distribution is Gaussian with mean chosen a priori and covariance equal to the Fisher information for the regression function. Working with I-priors brings the benefits of the methodology over to the binary case; one of these is a unified model-fitting framework that includes additive models, multilevel models, and models with one or more functional covariates. The challenge lies in estimation, and a variational approximation is employed to overcome the intractable likelihood. Several real-world examples are presented from analyses conducted in R.
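The step from latent variables to the probit link can be checked by simulation. In this illustrative sketch, a fixed number `f` stands in for the value of the regression function at one data point; thresholding $y^* = f + \varepsilon$ at zero with standard normal $\varepsilon$ produces ones with frequency $\Phi(f)$. In the I-prior model, `f` would itself carry an I-prior, which is not shown here.

```python
import math
import random

def probit(f):
    # Standard normal CDF Phi(f), written with math.erf
    return 0.5 * (1.0 + math.erf(f / math.sqrt(2.0)))

def simulate_binary(f, reps=200_000, seed=42):
    """Latent-variable view: y* = f + eps with eps ~ N(0, 1); observe y = 1 when y* > 0.
    Returns the simulated proportion of ones."""
    rng = random.Random(seed)
    ones = sum(1 for _ in range(reps) if f + rng.gauss(0.0, 1.0) > 0.0)
    return ones / reps

for f in (-1.0, 0.0, 0.5):
    print(f, round(simulate_binary(f), 3), round(probit(f), 3))
```

Since $P(y = 1) = P(\varepsilon > -f) = \Phi(f)$, the simulated and exact columns should agree to within Monte Carlo error.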

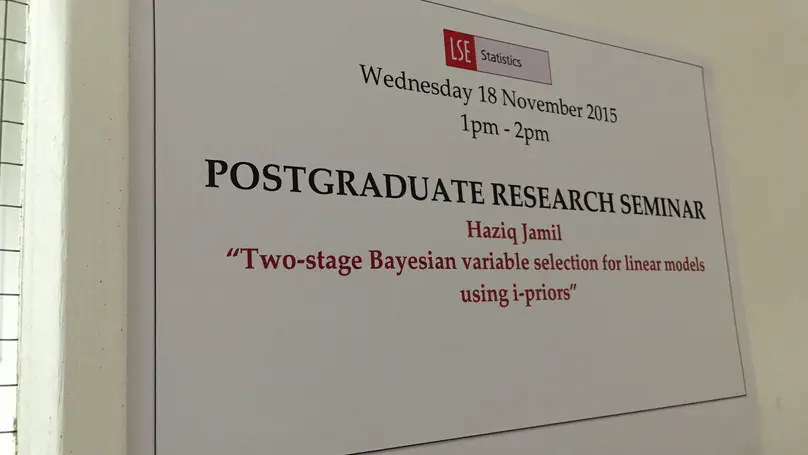

2015

In previous work, I showed that the use of I-priors in various linear models can be considered a solution to the over-fitting problem. In that work, estimation was still done using maximum likelihood, so in a sense it was a kind of frequentist-Bayes approach. Switching over to a fully Bayesian framework, we now look at the problem of variable selection, specifically in an ordinary linear regression setting. The appeal of Bayesian methods is that they reduce the selection problem to one of estimation, rather than a true search of the variable space for the model that optimises a certain criterion. I will talk about several Bayesian variable selection methods in the literature, and how I-priors can be used to improve on their results in the presence of multicollinearity.

The I-prior methodology is a new modelling technique that aims to improve on maximum likelihood estimation of linear models when the dimensionality is large relative to the sample size. By placing a prior that is informed by the data set (as opposed to a subjective prior), advantages such as model parsimony, fewer model assumptions, simpler estimation, and simpler hypothesis testing can be gained. By way of introducing the I-prior methodology, we will give examples of linear models estimated using I-priors, including multiple regression models, smoothing models, random effects models, and longitudinal models. Research in this area involves extending the I-prior methodology to generalised linear models (e.g., logistic regression), structural equation models (SEM), and models with structured error covariances.