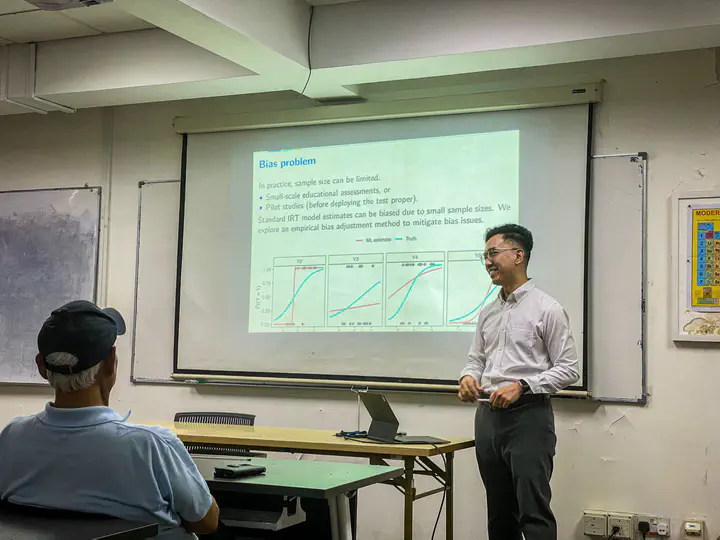

Item Response Theory (IRT) models: Reducing bias in small samples

Abstract

In this talk, we explore Item Response Theory (IRT), a family of statistical models that describe how the properties of individual test items (questions) and the abilities of the people answering them together determine the probability of a given response. By modeling how students respond to each item, IRT helps educators and researchers measure students' abilities more accurately. We introduce the basics of IRT, explain how it is applied in educational testing, and show how it yields insights into both the test questions and the students taking the test. The talk demonstrates how to fit IRT models in R, so that attendees can apply these techniques in their own work. We then turn to bias: when sample sizes are small, IRT parameter estimates can be biased, leading to inaccurate results. We present a method that corrects this bias using empirically based adjustments. The approach is simple, can be applied easily as a straightforward alternative to more complex techniques, and significantly improves the accuracy of IRT model results, making it valuable for both researchers and practitioners. Simulation studies show that the method effectively reduces bias, yielding more precise and reliable measurements in psychometric assessments.
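To make the idea of "measuring ability from responses" concrete, here is a minimal sketch written in Python rather than the R workflow the talk demonstrates. It assumes the two-parameter logistic (2PL) model, in which a person with ability theta answers an item with discrimination `a` and difficulty `b` correctly with probability 1 / (1 + exp(-a(theta - b))), and it assumes the item parameters are already known. The function names and the item parameters are hypothetical, chosen for illustration only, and the sketch does not implement the authors' bias-correction method.

```python
import math


def p_correct(theta, a, b):
    """2PL item response function: probability of a correct answer for a
    person with ability theta on an item with discrimination a, difficulty b."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def log_likelihood(theta, responses, items):
    """Log-likelihood of a 0/1 response pattern at ability theta,
    assuming responses are independent given theta."""
    ll = 0.0
    for x, (a, b) in zip(responses, items):
        p = p_correct(theta, a, b)
        ll += x * math.log(p) + (1 - x) * math.log(1.0 - p)
    return ll


def estimate_theta(responses, items, lo=-4.0, hi=4.0, step=0.01):
    """Maximum-likelihood ability estimate via a simple grid search on [lo, hi]."""
    n = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n + 1)]
    return max(grid, key=lambda t: log_likelihood(t, responses, items))


# Hypothetical item parameters (a, b) for a three-item test.
items = [(1.0, -1.0), (1.2, 0.0), (0.8, 1.0)]
responses = [1, 1, 0]  # correct, correct, incorrect
theta_hat = estimate_theta(responses, items)
print(f"Estimated ability: {theta_hat:.2f}")
```

A grid search keeps the sketch dependency-free; real software uses numerical optimizers instead. An all-correct or all-incorrect pattern has no finite maximum-likelihood estimate, so in that case the grid search simply returns an endpoint of the grid. In practice the item parameters are themselves estimated from data, and with small samples those estimates can be biased, which is the problem the talk addresses.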